Coronavirus at risk of AI outbreak.

There are some 75,000 plus peer-reviewed research papers on studies of coronaviruses.

Australia’s ‘ABC Online’ highlights what few of us knew about Florence Nightingale: that she was a statistician more than a nurse.

“Upon arriving at the British military hospital in Turkey in 1856, Nightingale was horrified at the hospital’s conditions and a lack of clear hospital records.”

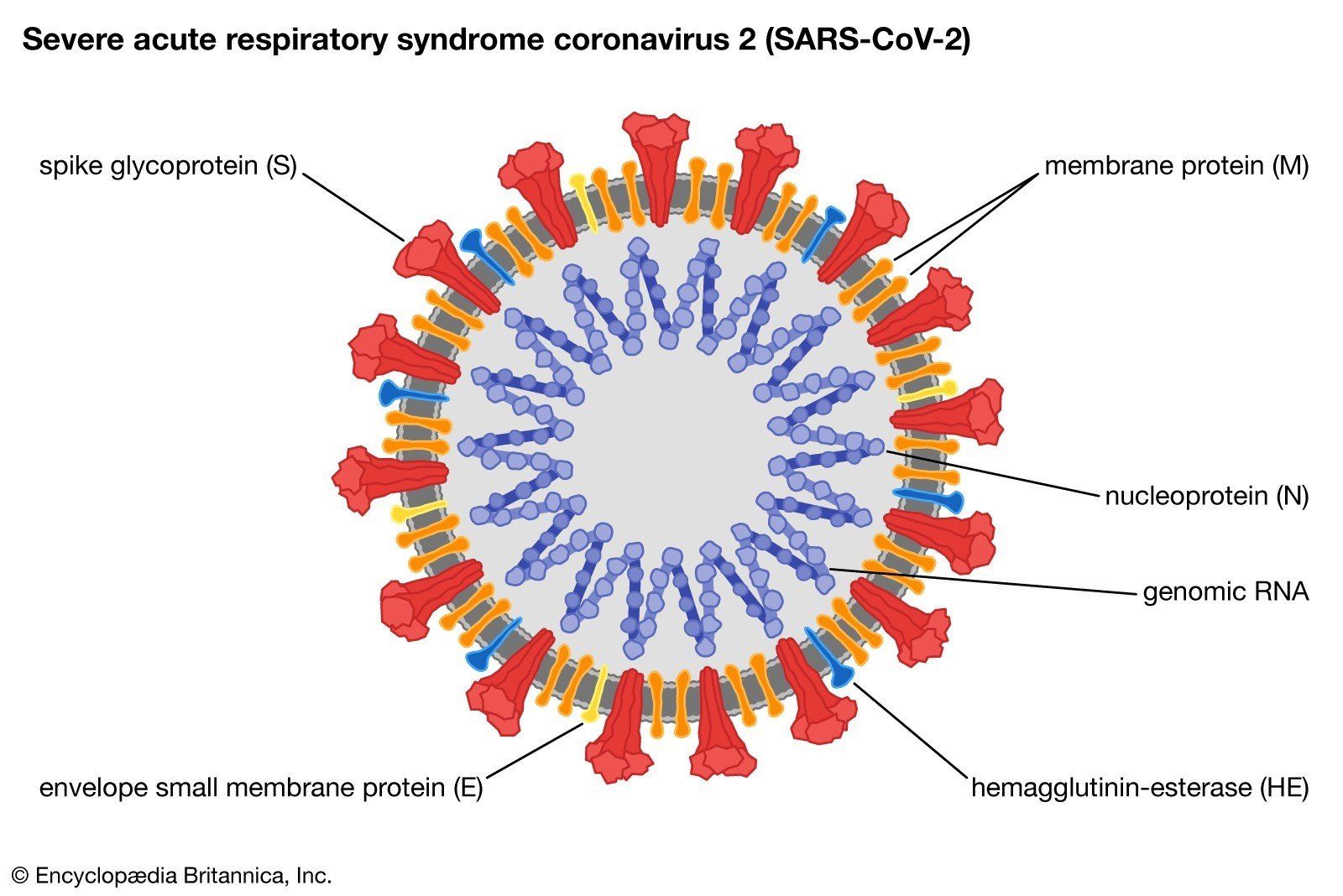

Coronaviruses are a family of viruses. There are many different kinds, and some cause disease in animals. Coronaviruses were first discovered in the 1930s when an acute respiratory infection of domesticated chickens in the US was shown to be caused by infectious bronchitis virus (IBV).

Animal coronaviruses can “spill over” into humans. Human coronaviruses were discovered in the early 1960s when it was found that two new viruses caused a cold in humans. Later, in 1967, electron microscopy showed that these two viruses, B814 and 229E, were related to the 1930s IBV “bird virus”. Soon after, these three were shown to be related to a fourth, the OC43 virus, and a fifth, mouse hepatitis virus, and they came to be known as coronaviruses.

There are seven coronaviruses that infect human beings. The most recently discovered is SARS-CoV-2, the new coronavirus that causes coronavirus disease 2019 (COVID-19).

COVID-19 is a highly infectious disease. But the virus that causes it can make copying errors as it replicates in body cells, changing its genetic sequence and “mutating” into a new variant. Therefore, the risk is that a new and more lethal human coronavirus could emerge in the future, especially if we lose control of COVID-19.

Hence there is a sense of urgency in better understanding the coronaviruses, and in finding antiviral treatments or vaccines for them.

Nightingale’s key recommendation to the British military commanders: handwashing and social distancing!

The story of Artificial Intelligence started 64 years ago, at the 1956 Dartmouth Summer Research Project on Artificial Intelligence. It was organised by John McCarthy, then a mathematics professor at Dartmouth College.

In his proposal, he stated that the conference was “to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

The idea of building an intelligent computer was met with one disappointment after another; so much so that regular setbacks led to periodic “AI winters” of despair.

In 1998 there was a breakthrough. Nine years earlier, in 1989, an Oxford University graduate, Tim Berners-Lee, had invented what became known as the World Wide Web, what most of us loosely refer to as “the Internet”.

In 1998 he proposed an incomplete architecture for a web of intelligent computers communicating with each other across the Internet. He called it a “Semantic Web”.

He proposed that the computers would be “intelligent” because they would understand the meaning of data that they exchanged without human programming; they would understand the “semantics” of their messages so that they could communicate intelligently, a little like humans do, at a basic but effective level.

What Berners-Lee “dreamed” of required a significant departure from conventional computing, a different way of structuring data to engineer it into information that a computer could understand by itself, without the need for computer instructions provided by humans. It was a breakthrough in the then forty-year-old computer science field of “Knowledge Engineering”.

There are some 75,000 plus peer-reviewed research papers on studies of coronaviruses.

There is substantial additional research material available in documents and social media.

Each one of these and other sources of coronavirus data is equivalent to one of Florence Nightingale’s datasets; isolated pieces of what could be information critical to the development of treatments or vaccines.

Nightingale tried to make sense of three datasets; today researchers have to investigate the contents of tens of thousands. If a single human being could read all of those, and remember their content, they might find that five of the documents had drawn conclusions that, taken together, suggest something no one had thought of.

We know that such a feat is impossible for a large group of clever researchers, let alone one.

Computers, on the other hand, can read at thousands of times the speed of a human.

They can “remember” everything they read.

This speed-reading utilises a technique called “Deep Learning” that can extract the concepts discussed in a digital document, where a highlighted concept might be one of the proteins found in a coronavirus membrane, or an RNA mutation pattern.

Artificial Intelligence utilises sophisticated computer knowledge stores called “Semantic Graphs”. Deep Learning can reference these fancy knowledge stores to enrich the extracted concept and store it as a concept within the Semantic Graph, the heart of any Artificial Intelligence system.
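As a rough illustration of the idea, and not of any particular product, a Semantic Graph can be pictured as a set of subject-predicate-object “triples”. The sketch below swaps in a simple keyword lookup for the Deep Learning extraction step (a real system would use a trained model), and the vocabulary, paper identifiers and the `mentions` predicate are all hypothetical names chosen for the example.

```python
# Hypothetical vocabulary mapping surface terms to concept identifiers.
# Assumption: a keyword lookup stands in for a trained Deep Learning model.
VOCABULARY = {
    "spike protein": "concept:SpikeProtein",
    "membrane protein": "concept:MembraneProtein",
    "rna mutation": "concept:RNAMutation",
}


def extract_concepts(text):
    """Return the sorted concept identifiers whose terms appear in the text."""
    lowered = text.lower()
    return sorted(cid for term, cid in VOCABULARY.items() if term in lowered)


def add_paper(graph, paper_id, text):
    """Store one (paper, 'mentions', concept) triple per extracted concept."""
    for concept in extract_concepts(text):
        graph.add((paper_id, "mentions", concept))


# The graph here is simply a set of (subject, predicate, object) triples.
graph = set()
add_paper(graph, "paper:001", "The spike protein binds the host receptor.")
add_paper(graph, "paper:002", "An RNA mutation altered the spike protein.")
```

Once both papers are stored this way, the graph records that they discuss the same concept, even though neither paper cites the other.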

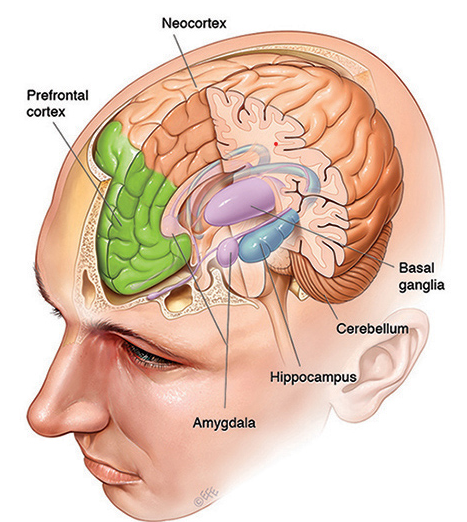

Because they can store and find relationships in massive amounts of data, Artificial Intelligence systems can use the Semantic Graph to reason, or to “infer” new knowledge, by finding hidden relationships between concepts and/or their properties (metadata).

This power of reasoning is currently only at the level of a human infant, though it is the closest we have to mimicking human brain behaviour. The limitation is offset by the amount of data the system can access and the speed at which it can find the hidden relationships from which it infers new information.
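One simple form of such inference can be sketched as follows: if one paper links A to B and another links B to C, but no paper links A to C, the system proposes A to C as a candidate relationship. This is a minimal sketch under assumed names; the `associated_with` predicate and the concepts `DrugX`, `ProteinY` and `ViralEntry` are hypothetical, and production reasoners use far richer rules.

```python
def infer_hidden_links(triples, predicate="associated_with"):
    """Propose A-C links where A-B and B-C are stated but A-C is not."""
    stated = {(s, o) for s, p, o in triples if p == predicate}
    candidates = set()
    for a, b in stated:
        for b2, c in stated:
            # Chain two stated links through a shared middle concept.
            if b == b2 and a != c and (a, c) not in stated:
                candidates.add((a, "possibly_" + predicate, c))
    return candidates


# Hypothetical facts, each imagined as coming from a different paper.
triples = {
    ("concept:DrugX", "associated_with", "concept:ProteinY"),
    ("concept:ProteinY", "associated_with", "concept:ViralEntry"),
}
proposed = infer_hidden_links(triples)
```

Here `proposed` holds a single suggested link between `DrugX` and `ViralEntry`, which is a hypothesis to investigate rather than an established finding.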

Of course, any new knowledge proposed needs to be referred to a highly intelligent human, such as an epidemiologist.

The potential of combining highly expert medical investigators with Semantic Computing techniques has been known since 2008, when Oxford University published its seminal findings on the CancerGrid study.

It was largely overlooked because Semantic Computing, as it has become known, represents a new paradigm in thinking about data structures and is based on complex mathematical concepts, and because other techniques appeared easier.

The more recent advances in Deep Learning extraction of knowledge from digital research documents can now be added to the mix, representing a substantial advance in Artificial Intelligence support for subject matter experts of all kinds.

The need for AI in medical research has been drawn into the spotlight by COVID-19, with Semantic Graphs the star of the show.

This coincides with acceptance that:

1. A computer can’t be intelligent unless it can store and access knowledge in a form it understands for itself, rather than by following instructions provided by a human.

2. A new generation of “graph” databases can enrich descriptions of things so that a computer can “understand” them better.

3. Semantic Graphs provide the most sophisticated graph model for representing knowledge in a computer system.

4. Semantic Graphs are essential for “robust and automated artificial intelligence” (Forrester Research and others).

5. Semantic Graphs will become as prevalent a core technology in large and medium enterprise informatics infrastructure during the 2020s as websites became in the 1990s.

Already, it is the vendors of Semantic Graph based solutions that are prominent in support of the epidemiologists studying COVID-19.

We are working with the three leading universities in Sydney to improve the extraction of knowledge from multilingual research papers. This is important because Europe and China are leading sources of research data on coronaviruses.

Our Semantic Graph will ingest that extracted knowledge and it will be made available to international collaborations of coronavirus investigators in a form that is a considerable improvement on what they have today.

It is the closest thing we have to a human researcher reading 75,000 research papers, remembering everything in them, and searching for relationships between research findings to highlight potential areas for closer study.

Hopefully other companies like ours can collaborate to share Semantic Graph data and insights through a feature of Semantic Graphs known as “linked open data”, speeding up research collaboration and the near-real-time publication of important findings.
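The appeal of linked open data can be shown with a small sketch: when two organisations identify the same concept with the same web address (URI), their graphs can be combined without any translation step. The URIs below use the placeholder domain `example.org`, and the two “labs” and their papers are hypothetical.

```python
# Hypothetical shared identifier; example.org is a placeholder domain.
SPIKE = "http://example.org/concept/SpikeProtein"

# Each organisation publishes its own set of triples.
lab_a = {("http://example.org/paper/A1", "mentions", SPIKE)}
lab_b = {("http://example.org/paper/B7", "mentions", SPIKE)}

# Because both use the same URI for the concept, a plain set union
# is enough to link the two datasets.
merged = lab_a | lab_b


def papers_mentioning(graph, concept):
    """Return the sorted papers that mention the given concept."""
    return sorted(s for s, p, o in graph if p == "mentions" and o == concept)


related = papers_mentioning(merged, SPIKE)
```

After the merge, `related` lists papers from both organisations, which neither dataset could have returned on its own.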